From Flash to Fast: Google Gemini's Deep Research Update Revolutionizes Research

IMPORTANT NOTE:

I’m moving this newsletter from Substack to LinkedIn. If you aren’t already connected with me on LinkedIn, please do! My link is: https://www.linkedin.com/in/smithstephen/

The newsletter is called “Intelligence By Intent” and I’m planning to publish 2-3x/week (and sometimes more). You can subscribe here:

https://www.linkedin.com/newsletters/intelligence-by-intent-7305304310756581377/

As always - that content will remain free. I hope you continue to find it valuable! Now - back to the program!

This past week, I was doing industry research for a client when Google updated its Gemini Deep Research tool. On a scale of 1 to 10, I would rate the first version launched earlier this year (based on the older Gemini 1.5 Pro model) a solid 4/10. This update makes it a lot better!

If you haven't used these deep research tools yet, you should. The ability to drop in a topic and have these tools search hundreds of sites for you and synthesize information will save you hours and hours of laborious work. Tools like Gemini's Deep Research and OpenAI's Deep Research are sophisticated AI systems that autonomously conduct in-depth investigations on complex topics, leveraging web searches, data analysis, and information synthesis to deliver incredibly detailed reports.

Last week, Google quietly rolled out a significant upgrade to their Gemini platform, supercharging the Deep Research feature with their 2.0 Flash Thinking Experimental model. After spending several days putting it through increasingly demanding tests, I'm convinced this update represents a genuine step change in AI capability for business users like me.

From Pattern-Matcher to Research Partner

Remember when AI was fancy autocomplete? I do. When I first started experimenting with these tools in my consulting work about two and a half years ago, they were moderately useful for summarizing information I already had, but they fell apart when asked to conduct a nuanced analysis.

The joke with some of my friends used to be that using AI for market research was like sending an intern to do a VP's job - you'd get something back, but you couldn't really trust it, and you'd spend more time fixing than it saved you.

This new 2.0 Flash Thinking update to Deep Research feels different. During a recent project analyzing the vertical ERP SaaS players for some niche industries, I noticed Gemini doing something I hadn't seen before. It wasn't just regurgitating information - it was breaking down the problem methodically, evaluating different factors, and building logical connections between disparate pieces of information.

"Hold on," I thought, "this is actually how I approach these problems."

The most striking difference was transparency. Rather than presenting conclusions as if they materialized from thin air, the model now shows its thinking process. When analyzing a potential market entry strategy for a client, I saw how it weighed competitive threats against market opportunities, considered regulatory factors, and built a coherent narrative.

It's far from perfect, but for the first time, I'm working with a research assistant rather than just a sophisticated search tool.

A Real-World Test Drive

Last Friday, I put the updated Deep Research feature to a real test. A PE client needed a comprehensive analysis of the vertical ERP SaaS market for very specific niche industries - the kind of multi-faceted research project that previously would have taken me days of work.

I fed Gemini Deep Research the basic parameters to structure the research. What came back was genuinely useful - not just a collection of facts but a structured analysis that identified key players, competitive positioning, key customers, and more.

The analysis maintained focus throughout, building logical connections between sections rather than treating each as an isolated topic. When I asked follow-up questions about specific segments, the model remembered the context of our previous exchange and provided relevant insights rather than generic information.

What impressed me most wasn't just the quality of the output but how it got there. The model's reasoning was clear and trackable, making it easy for me to validate its conclusions against my own industry knowledge.

"This is finally getting useful," I texted a colleague who had been equally skeptical about AI research tools. "It's not replacing us yet, but it's actually saving me time instead of creating more work."

Behind the Flashy Upgrade

I got curious about what changed under the hood of this update, so I dug into Google's technical documentation and pieced together the puzzle.

While they haven't revealed all their secrets, the 2.0 Flash Thinking approach fundamentally changes how the model reasons. Rather than generating responses in one shot, it implements a more sophisticated chain-of-thought process that breaks problems into manageable chunks, evaluates its progress, and adjusts course as needed. In my testing, the result has been much deeper and more nuanced answers with far fewer hallucinations.

Over coffee, a developer friend compared it to the difference between a student who memorizes facts and one who understands underlying principles. "The second student can solve novel problems," she explained, "because they grasp the conceptual framework, not just individual pieces of information."
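To make the "break it down, check progress, adjust course" idea concrete, here is a minimal sketch of that kind of loop. To be clear, this is my own illustration and not Google's implementation: the function names (run_research, toy_decompose, toy_draft, toy_accept) and the "must cite a source" quality check are all hypothetical stand-ins so the example runs without any model or network access.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    question: str
    answer: str = ""
    attempts: int = 0
    accepted: bool = False


def run_research(
    topic: str,
    decompose: Callable[[str], List[str]],
    draft: Callable[[str, int], str],
    accept: Callable[[str], bool],
    max_attempts: int = 3,
) -> List[Step]:
    """Break the topic into sub-questions, then draft, check, and retry each one."""
    steps = [Step(question=q) for q in decompose(topic)]
    for step in steps:
        # Keep revising a sub-answer until it passes the check or we run out of tries.
        while step.attempts < max_attempts and not step.accepted:
            step.attempts += 1
            step.answer = draft(step.question, step.attempts)
            step.accepted = accept(step.answer)
    return steps


# Hypothetical stand-ins for the model; a real system would call an LLM here.
def toy_decompose(topic: str) -> List[str]:
    return [
        f"Who are the key players in {topic}?",
        f"How are they positioned in {topic}?",
    ]


def toy_draft(question: str, attempt: int) -> str:
    # The first draft is unsupported; later drafts cite a (fake) source.
    suffix = " [source: example.com]" if attempt > 1 else ""
    return f"Draft {attempt} for: {question}{suffix}"


def toy_accept(answer: str) -> bool:
    # Stand-in quality check: only accept answers that cite a source.
    return "[source:" in answer


steps = run_research("vertical ERP SaaS", toy_decompose, toy_draft, toy_accept)
for s in steps:
    print(s.attempts, s.accepted, s.answer)
```

The point of the sketch is the shape of the loop, not the details: a one-shot system would stop at the first draft, while this one rejects the unsupported draft and tries again.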

Real Business Benefits (Not Just Tech Hype)

I've sat through enough vendor pitches to develop a healthy skepticism about technology "revolutions." But after using this update extensively across different business scenarios, I'm seeing tangible benefits in several areas:

Market analysis has improved dramatically. Rather than providing generic industry overviews, Gemini now identifies specific market dynamics and competitive positioning opportunities with greater precision. Last week, it helped me identify a gap in the mid-market segment for a client's product that wasn't obvious from the raw data.

Trend forecasting feels more nuanced. The model now better connects technological, social, and economic signals to identify emerging patterns.

Competitive intelligence is more actionable. Instead of just comparing feature sets, Gemini now provides a more sophisticated analysis of competitive strengths and vulnerabilities. That kind of analysis can feed directly into positioning strategy, for example for a SaaS client entering a crowded market.

Deep Research Is the First Real Agentic Use Case That Anyone Can Use

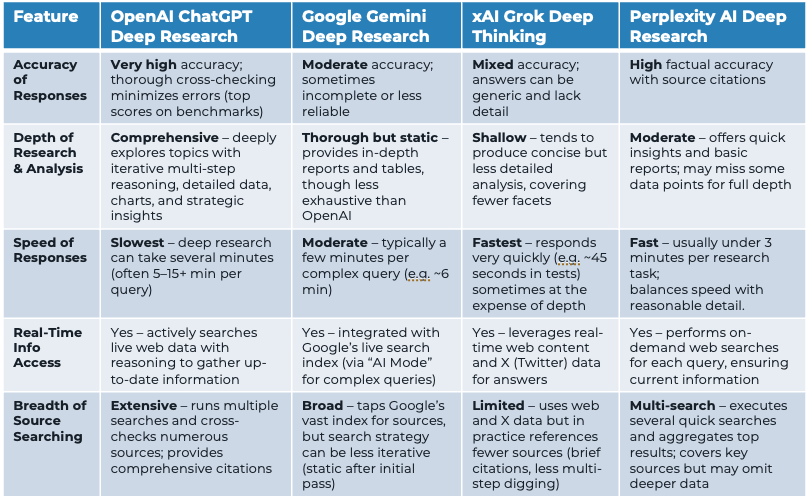

Google was the first to launch a deep research capability earlier this year, and OpenAI, Grok, and Perplexity quickly followed. I've used all four quite a bit, and here's how I would summarize their current capabilities against each other.

OpenAI is still the undisputed leader among these four in accuracy, depth of response, and sheer volume of content. If you are on the Plus subscription ($20/month), you can run 10 of these a month (120/month on the $200/month Pro subscription). The outputs are amazing.

Gemini Deep Research is a strong second-place contender, though, and for many use cases it will be just what people need. It will create a 2,000-3,000 word research report on any topic you throw its way. It will analyze hundreds of sites and even tell you in the final output which ones it included and excluded from the analysis. It's also available to anyone on the $20/month subscription, which gets you 20 deep research queries/day, or roughly 600/month. If I rate OpenAI as 9/10 on my personal rating scale, I would give this a solid 6.5-7/10.
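For a rough sense of what those quotas mean per report, here is the back-of-the-envelope arithmetic using only the prices and limits quoted above. It assumes you use your full quota and that a month has 30 days (which is where the roughly 600/month figure comes from); the plan labels are just my shorthand, and in practice most people will run far fewer reports than the cap allows.

```python
# (monthly price in USD, deep research reports allowed per month)
plans = {
    "OpenAI Plus": (20.0, 10),
    "OpenAI Pro": (200.0, 120),
    "Gemini Advanced": (20.0, 20 * 30),  # 20/day over an assumed 30-day month
}


def cost_per_report(price: float, reports: int) -> float:
    """Price per report if the whole monthly quota is used."""
    return price / reports


costs = {name: round(cost_per_report(p, n), 2) for name, (p, n) in plans.items()}

for name, cost in costs.items():
    print(f"{name}: ${cost:.2f} per report")
```

At full usage that works out to about $2.00 per report on OpenAI Plus, about $1.67 on OpenAI Pro, and about $0.03 on the Gemini subscription. Quality differs, as rated above, so this is a cost comparison only.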

Worth the Upgrade

A client recently asked if I thought Gemini's Advanced subscription was worth it for their research team. My answer: absolutely.

If you're primarily looking for basic information retrieval or simple content generation, the standard version remains capable. However, for those of us who regularly tackle complex research questions or need to analyze multiple sources of information, the upgraded Deep Research feature and access to the Flash and Pro models provide substantial value.

What I find most valuable isn't just better outputs but the transformation in my workflow. I'm spending less time on initial information gathering and more time applying critical thinking to refined analysis. The tool has become a genuine productivity multiplier rather than just a fancy replacement for Google Search.

As I closed my laptop after finishing an analysis in record time, I realized something had fundamentally shifted in my relationship with AI tools. For the first time, I wasn't just extracting basic information - I was genuinely collaborating with the system to develop insights neither of us might have reached alone.

That's when I knew this update was different. The 2.0 Flash Thinking model, which powers Deep Research, isn't just an incremental improvement - it's a glimpse of how AI can become a genuine thinking partner for knowledge workers. It's still early days, but for those of us who spend our days wrestling with complex business problems, this feels like a significant step forward.

Get in Contact

Steve is a Senior Partner at NextAccess and has worked with hundreds of companies to understand and adopt AI in their organizations. He has worked extensively with services firms (law firms, PE firms, consulting firms). Feel free to reach out via email: steve.smith@nextaccess.com

Want to talk about an AI workshop or personal training? Grab a 15-minute slot on my calendar: https://calendar.app.google/3ctoTDUgtg71TQDG7

AI Workshop Structure

What does a typical AI workshop look like? It’s a 3-hour session that covers the following (this example is from work I do with law firms – section 4 is tailored to your specific industry):

Learning Objectives:

Understand core concepts of AI, including model types, context windows, multi-modal AI, deep research, and reasoning models

Recognize roles within law firms where AI can be effectively implemented

Understand AI's capabilities and limitations, focusing on data privacy, security, and real-world use cases

Analyze practical use cases demonstrating AI's value in diverse organizational functions

Learn essential prompting techniques to optimize interactions with AI tools and enhance accuracy and relevance of results

1. Foundations (20 minutes)

AI ecosystem overview

Critical AI roles and capabilities for law firms

High-level model fundamentals for a legal audience (model capabilities, context windows, training cut-offs vs real-time search)

Detailed understanding of the privacy and security models, with a focus on how to use these tools while keeping your client data confidential

2. Advanced Analytical and Data Management Tools (15 minutes)

Deep Research methodologies for complex arguments and analysis

NotebookLM for document storage, integrated search, and audio overviews

Reasoning models and the impact on case strategy

3. General Business Use Case Examples (45 minutes)

Selected examples from GTM, Operations, Finance

4. Legal-Specific Use Case Examples (60 minutes)

Drafting Motions in Limine for Trial Preparation

Crafting Custom Partnership Agreements

Summarizing Statutes for Legislative Updates

Formulating a Series of Interrogatory Questions

Writing Plain-Language Litigation Updates for Clients

Extracting Key Terms from Documents and Agreements

Drafting Thought Leadership Articles on Regulatory Changes

Creating Legal Documents (e.g., NDAs)

Summarizing Depositions

Video Testimony Analysis

Strengthening and Re-Writing Legal Arguments

Simplifying Legal Contracts

5. Prompting, Emerging Interfaces and Wrap-Up (40 minutes)

Prompting techniques for legal professionals

Emerging multi-modal capabilities (image, audio, video) and impact on workflow

Wrap-up/Q&A