Google Just Showed Us What AGI Will Look Like. It's Disguised as an Image Generator.

Nano Banana Pro doesn't guess. It reasons, critiques, and corrects itself before rendering a single pixel.

The Nano Banana Pro Moment

I thought I was done being surprised this year.

We’ve all had that fatigue lately. Every week, a new model drops. Every week, we’re told everything has changed. You nod, you subscribe, you go back to work. The magic fades.

Then, three days ago, Nano Banana Pro dropped. And for the second time this year, I stared at a screen and forgot to blink (the first time was when NotebookLM introduced Audio Overviews).

To be clear: “Nano Banana Pro” is the nickname for what Google formally calls Gemini 3 Pro Image. The code name stuck (Google now uses it in its own announcements), and frankly, it fits. It’s weird, it’s playful, and it’s shockingly powerful.

Here is what it is, why it breaks the rules, and why you need to pay attention before your Monday morning stand-up.

What It Actually Is

Nano Banana Pro is a multimodal reasoning engine wrapped in an image generator.

Until last week, image AI was a slot machine. You put in a prompt, pulled the lever, and hoped for the best. If the hands were mangled or the text was gibberish, you pulled the lever again.

Nano Banana Pro doesn’t guess. It thinks.

When you ask it for a visual, it enters a “Thinking Mode” (similar to what we saw with reasoning text models earlier this year). It sketches, critiques its own work, corrects the lighting, checks spelling, and only then renders the final image. It does all of this in seconds.

The result is 4K resolution (in AI Studio), text that is actually legible (a first at this speed), and character consistency that holds up across twenty different generated images.

Why It Matters Now

The gap between “cool demo” and “business asset” just closed.

For the last year, if you wanted a consistent character for a brand campaign, you had to hire a specialist to train a custom LoRA (low-rank adaptation) model. It was technical, expensive, and slow.

With this update, that barrier is gone. Nano Banana Pro can hold up to 14 reference images in its working memory. You upload a photo of your product, your font, and your brand colors, and it nails them. Every time.

It’s integrated directly into Google Workspace as of Thursday. That means your marketing director doesn’t need a new login. They just open Slides, and it’s there.

What It Changes

The biggest shift is reliability.

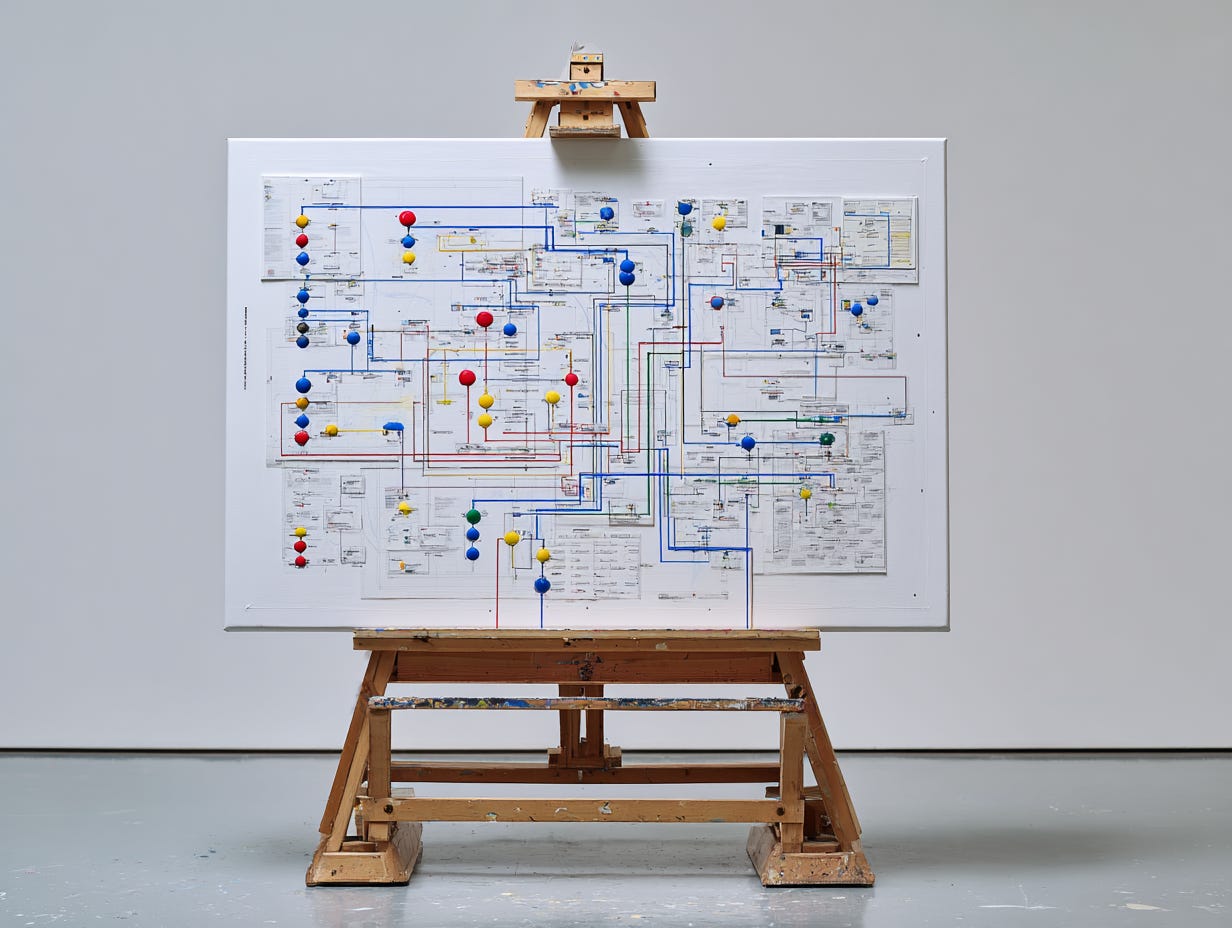

I played with the “Thinking” toggle on Friday. I asked for a complex diagram of a supply chain with labels. Old models would give me confident nonsense: words that looked like alien script. This model paused, “read” the logic of the supply chain, and spelled every label correctly.

Here is where the business value kicks in:

Zero-Shot Storyboards: You can generate a 10-frame storyboard where the protagonist wears the exact same shirt, has the same haircut, and the lighting matches frame-to-frame.

Localized Assets: It handles multilingual text perfectly. You can generate one ad creative and ask it to render the signage in Japanese, Spanish, and German without breaking the visual style.

The “Fix It” Button: It understands negative constraints. If you say “remove the coffee cup but keep the shadow on the table,” it reasons about the physics of how that shadow should remain (or change slightly) once the cup is gone.

My 12 Tests (And Why They Worked)

I spent the weekend playing with it (to be fair, I was also at the F1 race in Vegas, so my tests were somewhat limited). I wanted to see if the hype was real. Here are a dozen things I threw at it, ranging from the practical to the ridiculous:

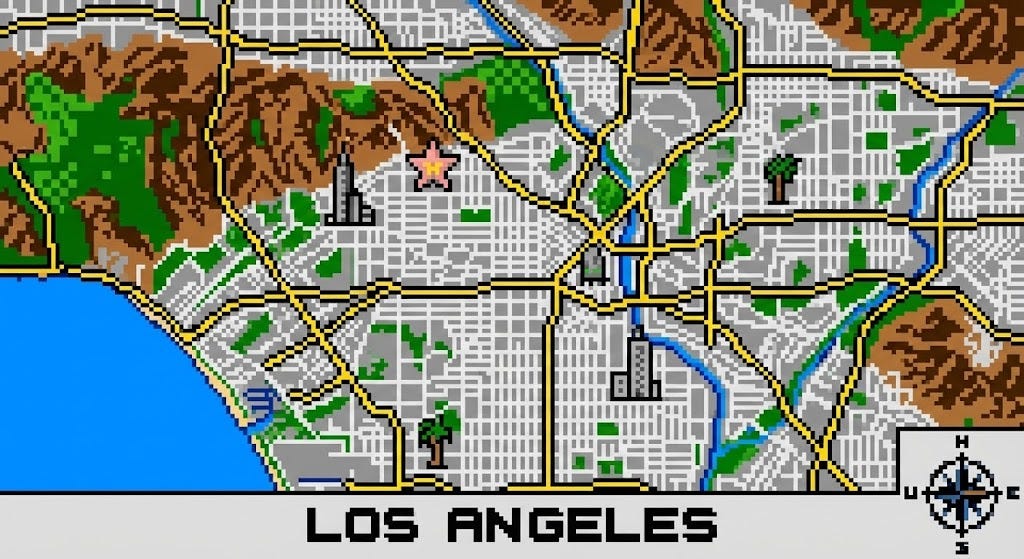

Show me a top-down cartography map of Los Angeles in the style of an 8-bit retro video game

Create a 3x3 grid with me and Magnus in funny poses in my car

Keep the original person (me) exactly as is and give me a UK passport with all details (NOTE: I don’t have a UK passport)

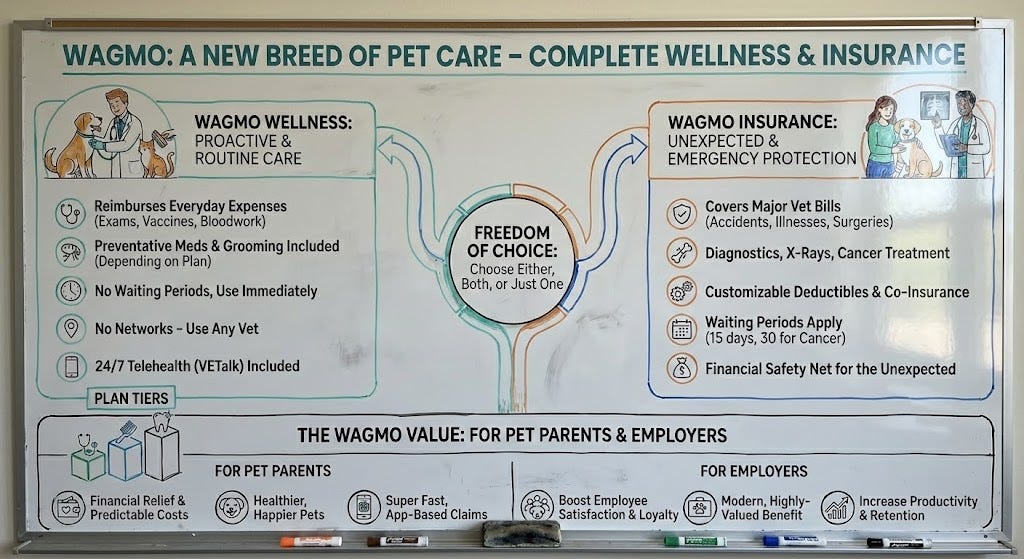

I’m working with a client, Wagmo (wagmo.io). Go create a compelling ad for them and do it as an infographic

Take that same content and transform it into the image of a professor's whiteboard. Use diagrams, arrows, boxes, and captions to explain the core idea visually. Use colors as well.

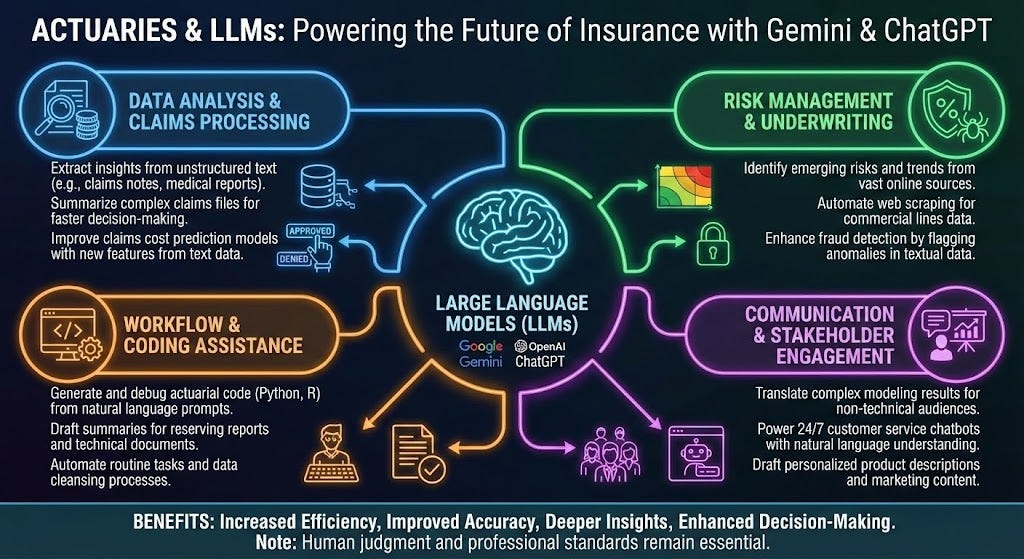

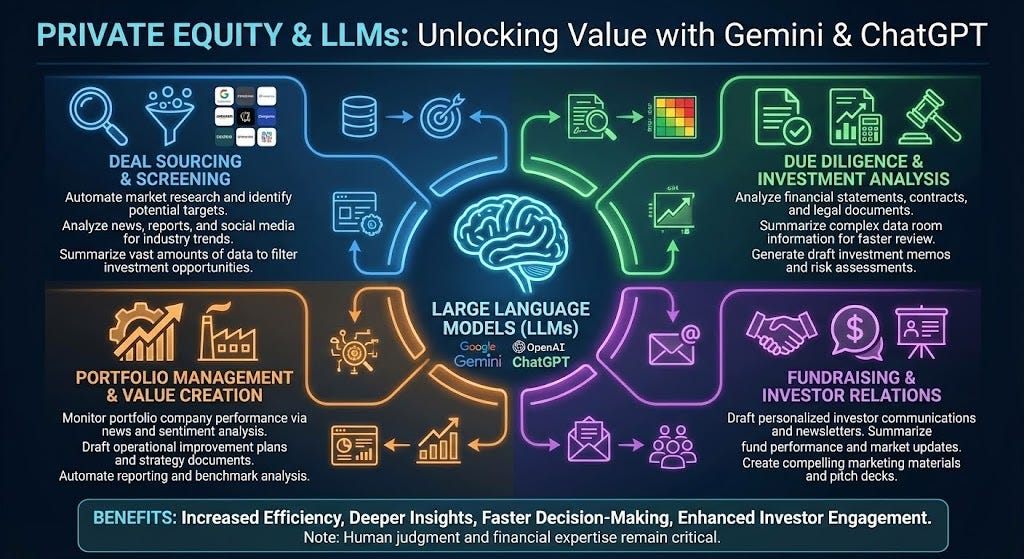

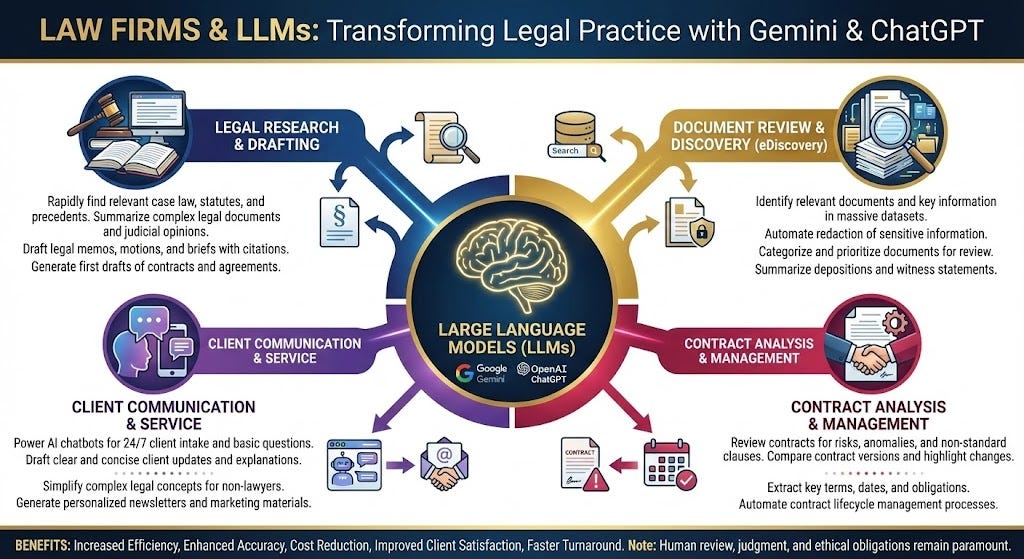

Create an infographic that shows how actuaries can use LLMs like Gemini 3 or ChatGPT 5.1. Then do the same for private equity and family law firms.

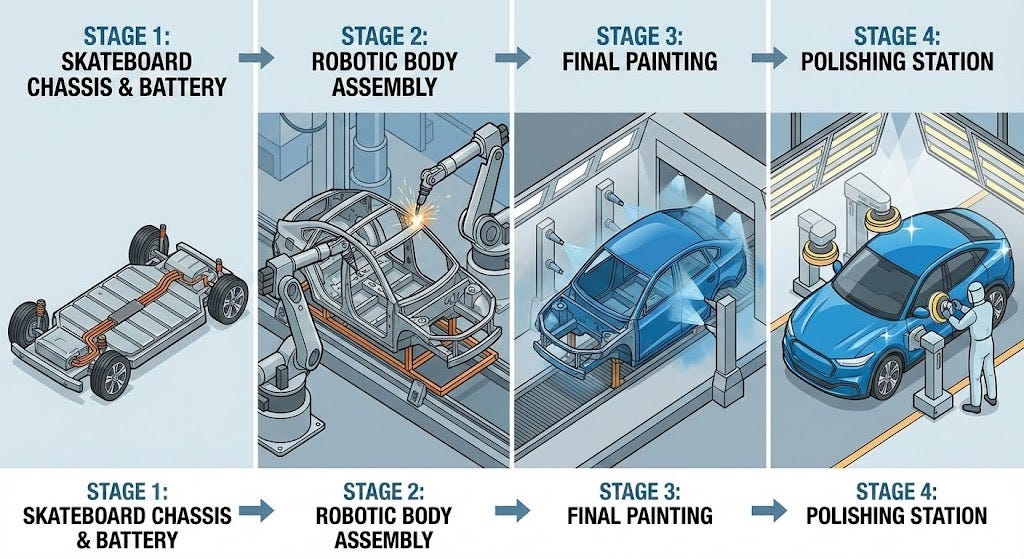

In an illustration, describe the assembly stages of an electric vehicle, showing the progression from the bare skateboard chassis and battery pack, through the robotic arm assembly of the body, to the final painting and polishing station.

Create a hyper-realistic image of a St. Bernard with a barrel under his neck with the word “Magnus” etched into it

I’ve uploaded my LinkedIn profile. Create an infographic of my career in the style of robots and rockets

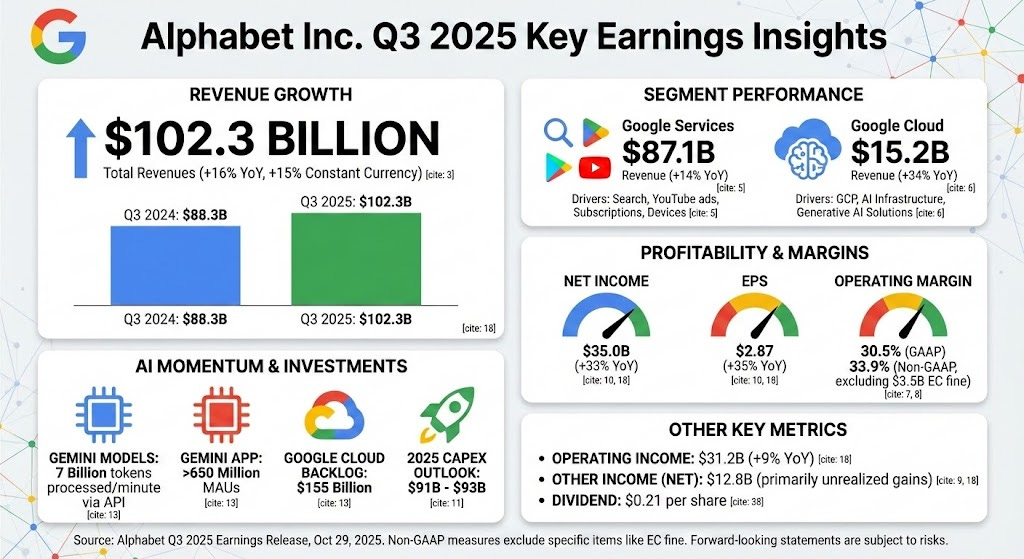

I’ve uploaded Google’s latest earnings. Create an infographic highlighting key insights from the earnings

Create an image at the location of 40°17′47″N 74°52′10″W on the evening of December 25, 1776

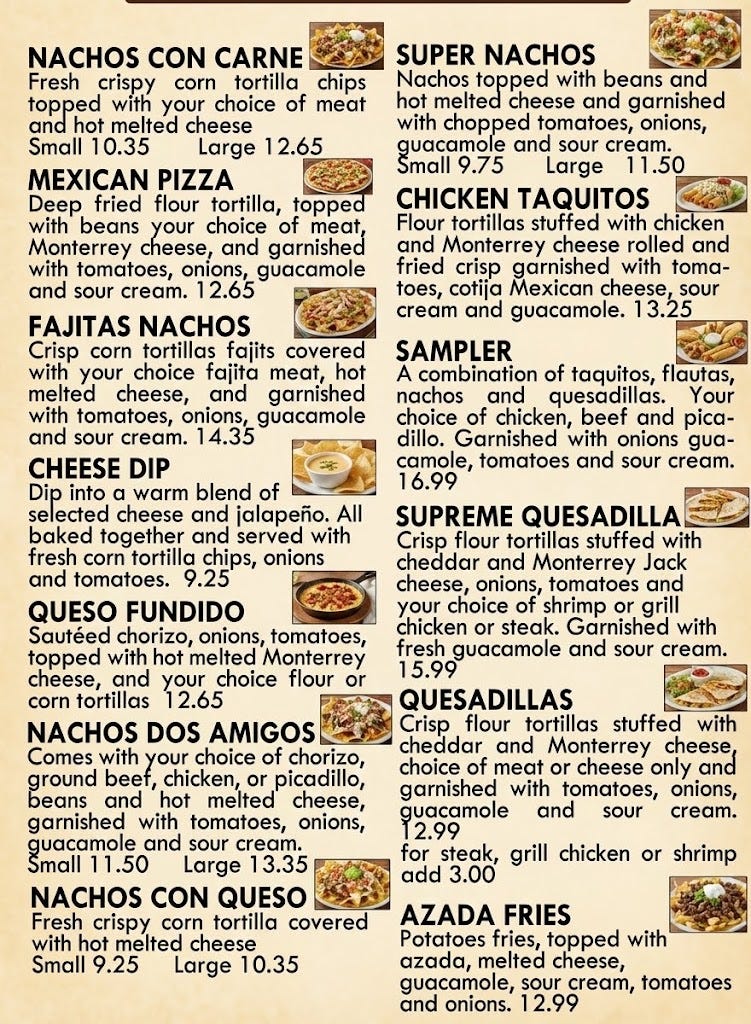

This is the appetizer menu from one of my favorite local Mexican restaurants. Recreate it and put images next to each description.

Risks and Trade-offs

It’s not perfect.

Cost: This isn’t free. The “Thinking” mode burns compute. The API pricing is around 24 cents per 4K image. That adds up if you have a team of ten people hitting “generate” all day.

Speed: It’s slower than the “dumb” models. You have to wait about 15-20 seconds for the reasoning step.

Safety Filters: It is extremely guarded. If you try to generate anything that even remotely edges toward copyright infringement of major characters (like Mickey Mouse) or unsafe content, it shuts down hard.
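To make the cost and speed trade-offs concrete, here is a rough back-of-envelope calculator in Python. It takes the figures quoted above (about 24 cents per 4K image and 15 to 20 seconds of reasoning per image) at face value. The team size, images per person, and 21 working days per month are hypothetical assumptions, not measured usage.

```python
# Back-of-envelope estimate using the figures quoted above:
# roughly $0.24 per 4K image and 15-20 seconds of reasoning per image.
# Team size, images per person, and workdays are illustrative assumptions.

PRICE_PER_4K_IMAGE_USD = 0.24
SECONDS_PER_IMAGE = (15, 20)  # low and high estimates
WORKDAYS_PER_MONTH = 21       # assumption


def daily_cost(team_size: int, images_per_person: int,
               price: float = PRICE_PER_4K_IMAGE_USD) -> float:
    """Total API spend per day, in US dollars."""
    return team_size * images_per_person * price


def wait_minutes(num_images: int) -> tuple[float, float]:
    """Low/high minutes spent waiting if images are generated one at a time."""
    low, high = SECONDS_PER_IMAGE
    return num_images * low / 60, num_images * high / 60


if __name__ == "__main__":
    # Hypothetical team: ten people, 50 images each per working day.
    cost = daily_cost(team_size=10, images_per_person=50)
    print(f"Daily: ${cost:,.2f}  Monthly: ${cost * WORKDAYS_PER_MONTH:,.2f}")
    low, high = wait_minutes(50)
    print(f"Per-person waiting: {low:.1f}-{high:.1f} minutes per day")
```

Under those assumptions, the spend comes to $120 a day (about $2,520 a month), and each person spends roughly 12.5 to 17 minutes a day waiting on renders. Swap in your own numbers and check Google's current pricing, since rates can change.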

What to Do Monday

You don’t need a strategy meeting for this. You just need to get hands on keyboards.

Log in to Gemini Advanced (or Workspace). Verify you have the “Nano Banana Pro” / Gemini 3 Pro Image update.

Run the “Brand Consistency” Test. Take your company logo and a product shot. Ask it to place your product in five different environments (for example, a desk, a beach, or a bag). See whether it hallucinates.

Check the text capability. Ask it to generate a social post image that includes your headline text inside the image.

Brief the Creative Team. Show them this isn’t about replacing them; it’s about skipping the drafting phase. They can now start at “Good” and move to “Great.”

The nickname “Nano Banana” is silly. The tech is serious.

We just moved from AI that draws to AI that designs. There is a difference. New, incredible use cases are showing up online every hour of every day right now. The integration into NotebookLM is also just incredible. Go research, experiment, and try this tool out. You will be astonished. It truly feels like there are no limits to what this tool can do.

I write these pieces for one reason. Most leaders do not need another ranking of image models; they need someone who will sit next to them, look at where visuals and decks and diagrams actually slow work down, and say, “Here is where this new image model belongs, here is where your designers and existing tools should still lead, and here is how we keep all of it safe, compliant, and on brand.”

If you want help sorting that out for your company, reply to this or email me at steve@intelligencebyintent.com. Tell me what you sell, who you are trying to reach, and where your team is stuck waiting on assets or slides. I will tell you what I would test first, which part of the Nano Banana Pro and Gemini stack I would put on it, and whether it even makes sense for us to do anything beyond that first experiment.