Google's Gemini Likely Just Crossed 1 Quadrillion Tokens Processed Per Month: Here's Why Your Brain Can't Comprehend That Number

From 9.7 trillion to over 1,000 trillion in just 16 months, the AI revolution is accelerating faster than we can measure

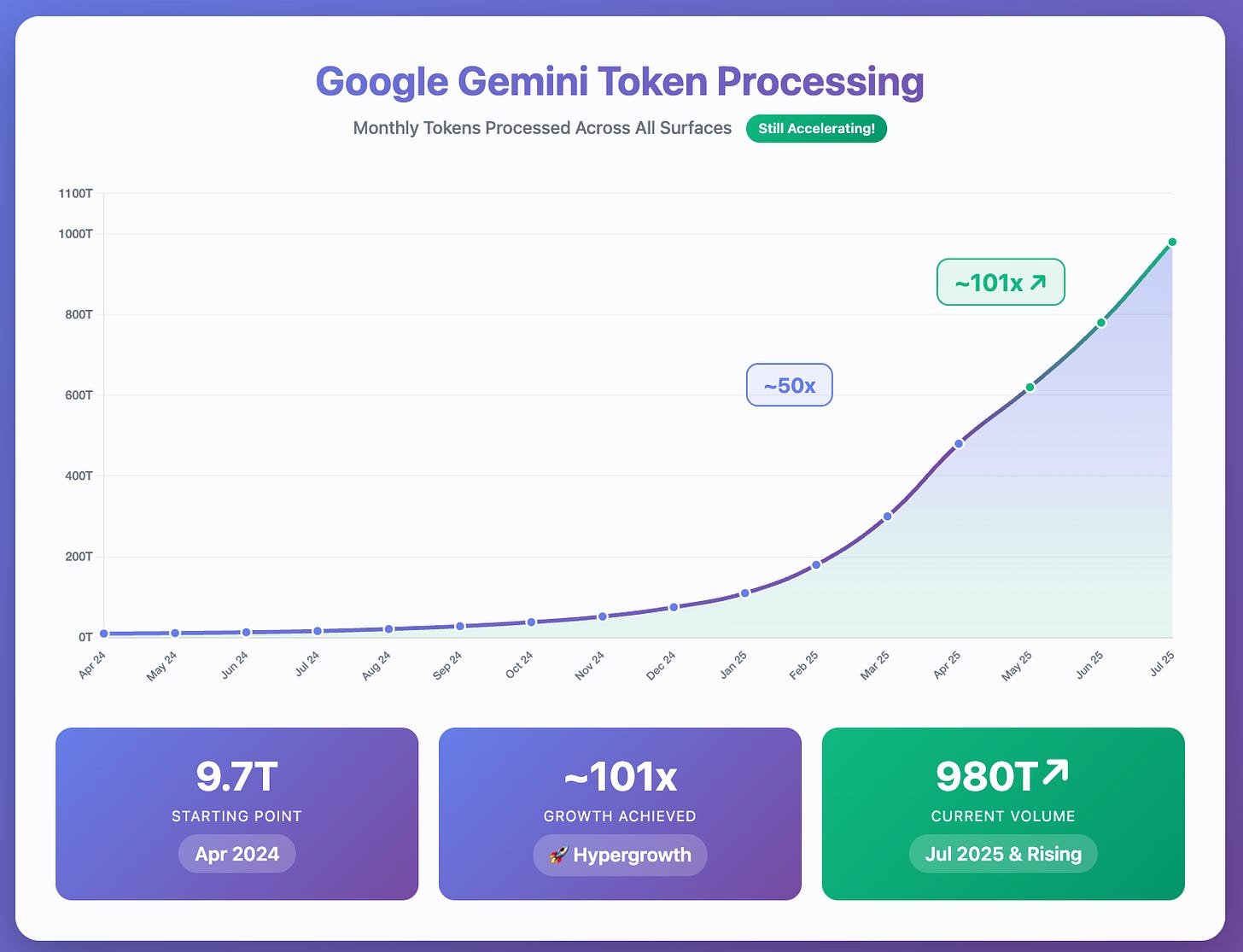

Every now and then, you come across a statistic so eye-opening that it makes you pause and think. This happened to me earlier this year at Google's I/O event in May, when they announced that the Gemini models were processing 480 trillion tokens per month, up from 9.7 trillion tokens just one year before. That is roughly a 50x increase in twelve months.

But wait, what exactly is a token? Let me break it down in a way that makes sense.

Think of tokens as the way AI models "chew up" text into bite-sized pieces they can digest and process. Just as we read text word by word, AI models read it token by token. The token is their fundamental unit for working with language.

Here's where it gets interesting: tokens aren't always full words. Sometimes they are: simple words like "cat" or "run" are typically one token each. But longer or more complex words might get split up. The word "understanding," for instance, might be broken into three tokens: "under" + "stand" + "ing". Punctuation marks and spaces count toward the token total too.

So, how do tokens relate to the words we use every day? A handy rule of thumb for English text is that 100 tokens equal roughly 75 words. Put another way, each token represents about three-quarters of a word on average.
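As a rough sketch, that rule of thumb can be written as a one-line conversion. The 0.75 ratio is the approximation quoted above, not an exact property of any particular tokenizer, and the function name is purely illustrative.

```python
# Rule of thumb from the text: 100 tokens ~ 75 words (an approximation).
WORDS_PER_TOKEN = 0.75


def tokens_to_words(tokens: float) -> float:
    """Estimate how many English words a given token count represents."""
    return tokens * WORDS_PER_TOKEN


# 480 trillion tokens works out to about 360 trillion words.
print(f"{tokens_to_words(480e12):,.0f} words")
```

Real tokenizers vary by model and by language, so treat the result as an order-of-magnitude estimate.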

When Google showed the chart with that 480 trillion number at I/O, I knew it was huge, but I didn't have a context that made it tangible. Then, on the earnings call in July, Sundar Pichai announced that the number of tokens Gemini was processing had more than doubled since I/O, to 980 trillion tokens per month. I'm not sure I've ever personally seen anything accelerate that quickly.

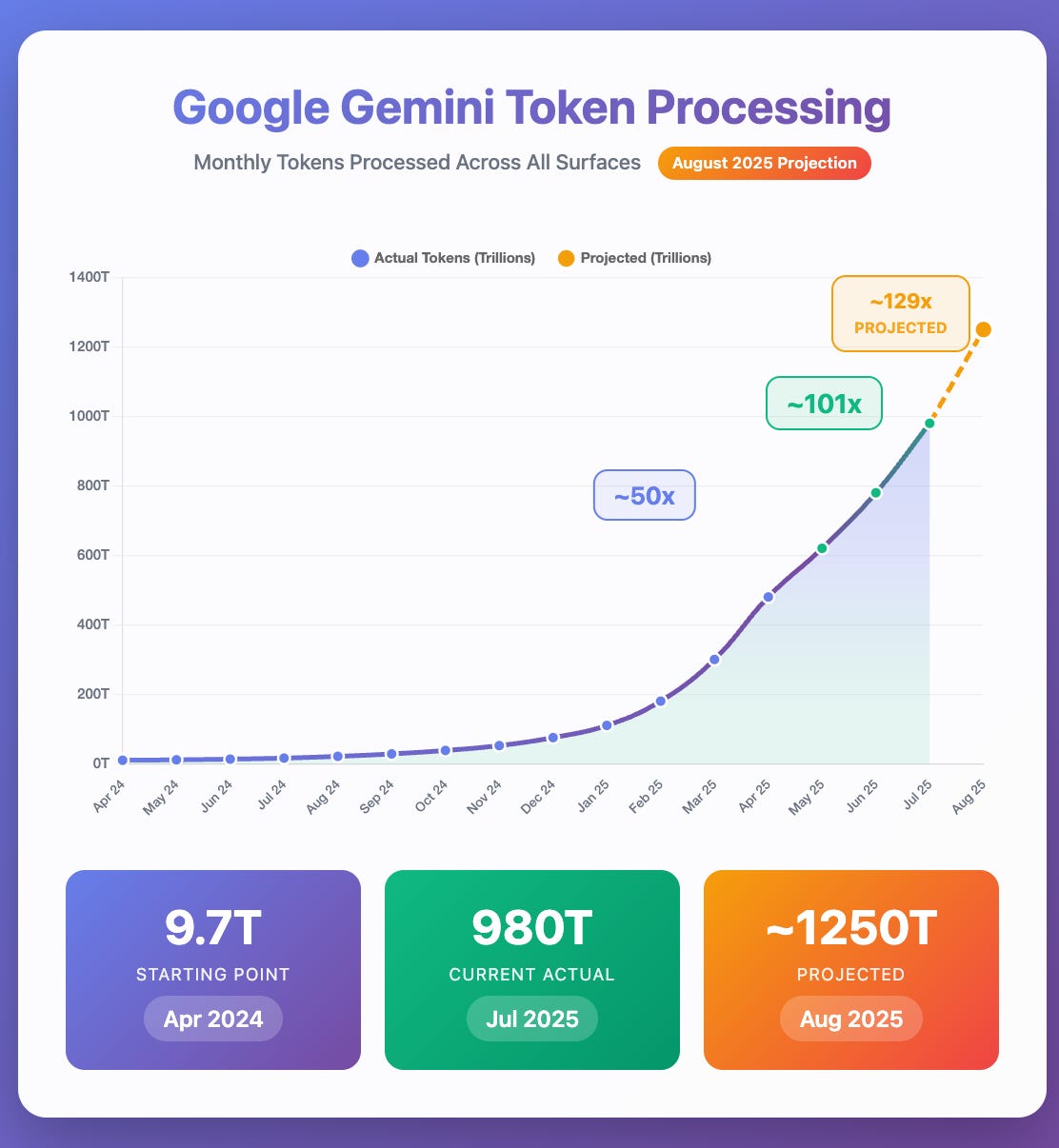

Given the timing of that announcement and the rapid growth in Gemini usage, it is now likely that Google's Gemini is processing well over one quadrillion tokens per month. I asked Claude to look at the current assumptions on growth rates and estimate where the number would be at the end of August, and you can see the result in the chart above. Google’s Gemini could be processing somewhere in the neighborhood of 1.25 quadrillion tokens per month. Wow!
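For anyone who wants to sanity-check an estimate like this, here is a minimal sketch of a compound-growth projection. It is not necessarily how Claude produced the chart. The spacing between the two reported readings is my assumption (two or three months, depending on which month each figure actually describes), and the function names are illustrative.

```python
def monthly_growth(start: float, end: float, months: float) -> float:
    """Compound monthly growth factor implied by two readings."""
    return (end / start) ** (1 / months)


def project(latest: float, growth: float, months_ahead: float) -> float:
    """Carry the latest reading forward at a constant monthly growth factor."""
    return latest * growth ** months_ahead


# Reported figures, in trillions of tokens per month.
io_reading, july_reading = 480, 980

for months_apart in (2, 3):  # assumed gap between the two readings
    g = monthly_growth(io_reading, july_reading, months_apart)
    estimate = project(july_reading, g, 1)  # one month past the July figure
    print(f"{months_apart} months apart: {g - 1:.0%} per month, "
          f"~{estimate:,.0f} trillion one month later")
```

Under either assumption the projection lands between roughly 1.2 and 1.4 quadrillion tokens per month, which is the same neighborhood as the chart's estimate.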

I was giving an overview presentation on AI to a new client this week, and I decided to mention that the Gemini models have now likely passed one quadrillion tokens per month. I wanted to put that number into context and explain it in a way that made sense to everyone.

Here's how I explained it:

1 quadrillion tokens ≈ 750 trillion words (using the typical ratio of ~1.33 tokens per word).

In book pages: That's approximately 3 trillion pages at 250 words per page, typical for a novel (or 2.5 trillion pages at 300 words per page).

The Library of Everything: If you printed all this text into books (300 pages each), you'd have 10 billion books. That's more than one book for every person on Earth, with enough left over to give everyone in China a second copy. The Library of Congress has approximately 17 million books, so this would be equivalent to roughly 588 libraries of that size.
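The book math is easy to check. This sketch assumes 250 words per page (the low end of the range above), 300 pages per book, and the 17 million figure quoted for the Library of Congress. The variable names are mine.

```python
words = 750e12                      # ~1 quadrillion tokens at 0.75 words per token
words_per_page = 250                # assumption: low end of the 250-300 range
pages_per_book = 300
library_of_congress_books = 17e6    # figure quoted in the text

pages = words / words_per_page
books = pages / pages_per_book
libraries = books / library_of_congress_books

print(f"{pages:,.0f} pages, {books:,.0f} books, "
      f"{libraries:,.0f} Libraries of Congress")
```

At 300 words per page the page count drops to 2.5 trillion, and the book and library counts shrink proportionally.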

The Human Writing Marathon: If every person on Earth (8 billion people) typed continuously at 40 words per minute for 8 hours a day, it would take them almost 5 days of synchronized global writing to produce this much text.
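Here is the same calculation as a quick sketch, using the population, typing speed, and hours per day stated above. The variable names are illustrative.

```python
people = 8e9            # world population used in the text
words_per_minute = 40
hours_per_day = 8
total_words = 750e12    # ~1 quadrillion tokens at 0.75 words per token

# Words the whole planet could type in one 8-hour day.
words_per_day = people * words_per_minute * 60 * hours_per_day
days_needed = total_words / words_per_day

print(f"{words_per_day:,.0f} words per day, {days_needed:.1f} days needed")
```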

A Galaxy of Information: The Milky Way galaxy is estimated to contain between 100 billion and 400 billion stars. If each of the 750 trillion words processed by Gemini in a month were a star, you could create thousands of galaxies the size of our own.

Outnumbering the Cells in the Human Body: The average adult human body is composed of an estimated 30 to 40 trillion cells. The number of words processed by Gemini monthly is roughly 19 to 25 times the number of cells in a single human being.
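Both comparisons are simple ratios, sketched here across the low and high estimates quoted above. The helper name is illustrative.

```python
WORDS = 750e12  # ~1 quadrillion tokens at 0.75 words per token


def times_larger(words: float, quantity: float) -> float:
    """How many times the word count exceeds another quantity."""
    return words / quantity


# Milky Way star estimates: 100 billion to 400 billion.
for stars in (100e9, 400e9):
    print(f"{stars:,.0f} stars per galaxy: "
          f"{times_larger(WORDS, stars):,.0f} galaxies")

# Human body cell estimates: 30 trillion to 40 trillion.
for cells in (30e12, 40e12):
    print(f"{cells:,.0f} cells per body: "
          f"{times_larger(WORDS, cells):.2f} bodies")
```

Depending on which estimate you pick, that is about 1,900 to 7,500 Milky Ways, or about 19 to 25 human bodies' worth of cells.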

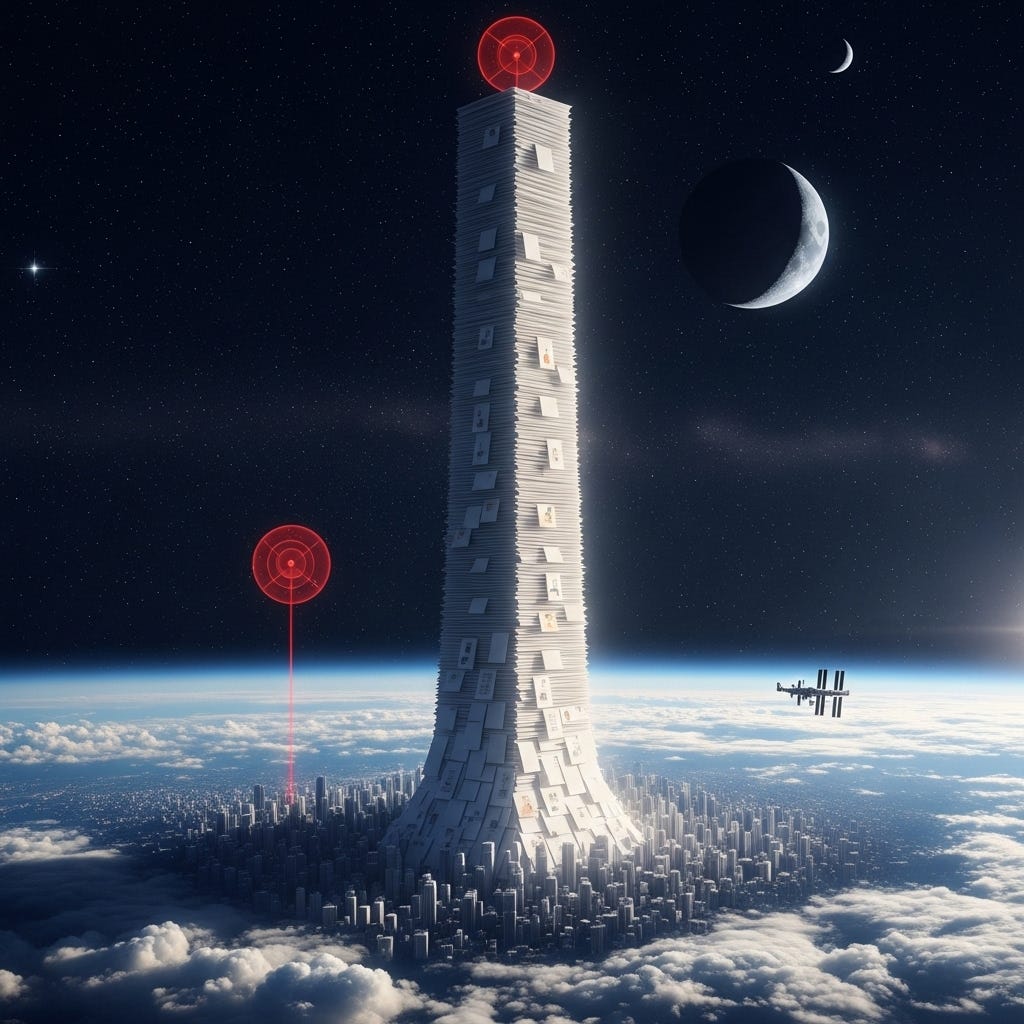

The Tower to the Moon (and Then Some): If you printed all one quadrillion tokens onto paper - 300 words per page, standard 0.10 mm office-paper thickness - the stack would climb roughly 155,000 miles (≈ 250,000 km), about two-thirds of the way to the Moon. It would loom ≈ 610 times higher than the International Space Station and ≈ 2,500 times taller than the "edge of space" (100 km), easily triggering NASA's worry radar. It wouldn't just scrape the sky - it would whisk you on a paper elevator through the stratosphere, past orbit, and into the cold, dark void.
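The stack height can be checked with a short sketch. The paper thickness and words per page come from the paragraph above. The one-page-per-sheet assumption, the average Earth-Moon distance (384,400 km), and the approximate ISS altitude (about 408 km, which varies) are values I am supplying.

```python
words = 750e12              # ~1 quadrillion tokens at 0.75 words per token
words_per_page = 300
sheet_thickness_mm = 0.10   # standard office paper, per the text

MOON_KM = 384_400           # average Earth-Moon distance
ISS_KM = 408                # approximate ISS altitude (assumption; it varies)
EDGE_OF_SPACE_KM = 100      # the "edge of space" used in the text
KM_PER_MILE = 1.609344

sheets = words / words_per_page                  # assumes one page per sheet
height_km = sheets * sheet_thickness_mm / 1e6    # millimeters to kilometers

print(f"Stack height: {height_km:,.0f} km ({height_km / KM_PER_MILE:,.0f} miles)")
print(f"Fraction of the way to the Moon: {height_km / MOON_KM:.2f}")
print(f"Multiples of ISS altitude: {height_km / ISS_KM:,.0f}")
print(f"Multiples of the edge of space: {height_km / EDGE_OF_SPACE_KM:,.0f}")
```

Printing on both sides of each sheet would halve the height, so the single-sided assumption matters here.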

These are mind-boggling numbers, and the trend is only accelerating. This is just what Google's Gemini is processing; it does not account for OpenAI, Anthropic, xAI, or any of the numerous Chinese open-source providers. The world has changed, and that change is just beginning.

I found all of this incredibly interesting and thought that you would too.

Thanks for reading. If you enjoyed this and are not a subscriber, please subscribe. If you found this interesting, please share it with your friends.

If you have a moment, please take a look at my updated website at https://intelligencebyintent.com/ - I would love to hear your thoughts/feedback!

Quick production note: the charts were all created with the new Claude Opus 4.1 model in the artifact window. The final “paper stack” image was created by Google’s Imagen 4 Ultra model in AI Studio.