OpenAI's o3 and o4-mini: Pushing the Boundaries of AI Reasoning

OpenAI's o3 can read a 300-page book and write a 150-page analysis in response. My human colleagues still haven't finished reading the 5-page memo I sent last week.

The AI landscape shifted again yesterday as OpenAI released two new powerhouse models – o3 and o4-mini. As someone tracking the evolution of AI capabilities for enterprise applications, I spent the day putting these models through their paces. What I found was both impressive and nuanced, with implications that every business leader should understand.

The o-Series Evolution: More Than Just Chat

When OpenAI first announced the o-series last year, it signaled a deliberate shift from conversational AI to reasoning-focused models. Yesterday's release represents the culmination of that vision: models specifically engineered to think, reason, and solve complex problems.

The new models bring several capabilities that caught my attention:

1. Native Tool Integration

Both o3 and o4-mini can seamlessly integrate with external tools without requiring complex workflows or third-party frameworks. This means they can autonomously use web browsers, execute Python code, process images, and even generate visuals – all as part of their reasoning process.

I tested this by asking o3 to analyze some quarterly financial data, create visualizations, and then use those visualizations to make predictions. The model smoothly transitioned between data analysis, code execution, and insight generation without any awkward handoffs. This represents a significant leap forward for enterprises that have struggled with the fragmented AI tool landscape.

2. "Thinking with Images"

Perhaps the most fascinating innovation is the models' ability to incorporate visual reasoning directly into their thought processes. These aren't just models that can caption images – they can actively manipulate, transform, and reason through visual data.

In one test, I gave o4-mini a diagram of a client company's somewhat convoluted approval process. It not only understood the workflow but also identified bottlenecks, suggested optimizations, and even redrew portions of the diagram to illustrate its recommendations. The seamless blending of visual and logical reasoning feels genuinely new.

3. Expanded Context and Output Capacity

Both models support a 200,000-token context window and can generate up to 100,000 tokens in a single response. To put that in perspective, that's roughly the equivalent of ingesting a 300-page book and then writing a detailed 150-page analysis in response.
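The page counts follow from a rough conversion. Here is a minimal sketch, assuming about 0.75 English words per token and about 500 words per page. Both are common rules of thumb that I'm supplying for illustration, not figures published by OpenAI, and real documents will vary.

```python
# Rough rules of thumb (assumptions for illustration, not official figures).
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 500


def tokens_to_pages(tokens: int) -> float:
    """Convert a token count to an approximate page count."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


context_pages = tokens_to_pages(200_000)  # what the model can read
output_pages = tokens_to_pages(100_000)   # what the model can write
print(f"Context: ~{context_pages:.0f} pages, output: ~{output_pages:.0f} pages")
```

Under those assumptions the context window works out to about 300 pages and the output limit to about 150 pages. Dense legal text or source code tokenizes differently, so treat this as an order-of-magnitude check.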

For businesses dealing with complex documentation, legal contracts, or extensive datasets, this expanded capacity eliminates many of the context limitations that previously forced uncomfortable compromises.

Benchmark Performance: Raising the Bar

Numbers tell part of the story, and the benchmarks are undeniably impressive. On the American Invitational Mathematics Examination (AIME) 2024 benchmarks, o3 achieved 91.6% accuracy while o4-mini reached 93.4%, outperforming previous state-of-the-art models. These weren't cherry-picked tests; the models demonstrated similar dominance across scientific reasoning, coding, and multimodal understanding.

In software engineering tests, o3 reached 69.1% on SWE-bench Verified, setting a new high-water mark for code-related tasks. The multimodal benchmarks were equally impressive, with o3 scoring 82.9% on MMMU and 87.5% on MathVista, demonstrating its ability to combine visual and mathematical reasoning.

These benchmarks are particularly remarkable because they reflect real-world problem-solving capabilities rather than abstract language modeling metrics. We're seeing AI that can tackle graduate-level physics problems, debug complex codebases, and solve visual-mathematical challenges at levels approaching human expert performance.

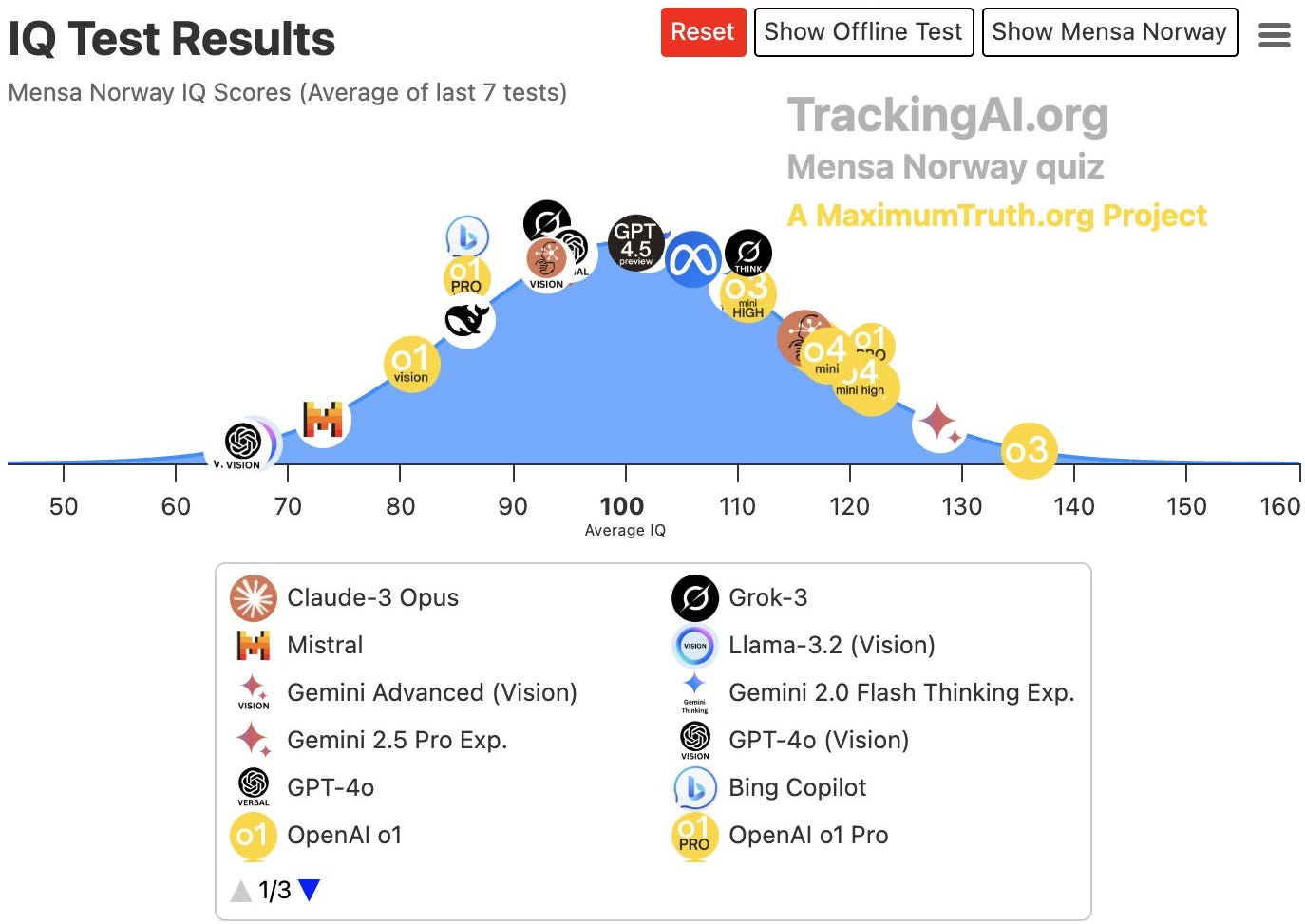

I'll post more benchmark results next week, as everyone is still updating the tables, but I will share one here. o3 scored 136 on the Norway Mensa test, passing Gemini 2.5 Pro. The average human score is 100, and a score of 120 falls in the top 10% of humans. A score of 136 puts o3 in the top 1%.

o3 vs. o4-mini: Performance and Practicality

The most interesting aspect of this dual release is the relationship between the two models. While o3 represents OpenAI's most advanced reasoning system, o4-mini delivers approximately 90% of o3's performance at less than one-ninth the cost.

The pricing difference is substantial:

o3: $10 per million input tokens, $40 per million output tokens

o4-mini: $1.10 per million input tokens, $4.40 per million output tokens
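To see what that gap means per request, here is a small sketch that prices a single call using the rates listed above. The workload of 50,000 input tokens and 5,000 output tokens is a hypothetical example I picked, and the function name is my own.

```python
# USD per million tokens, taken from the prices listed above.
PRICES = {
    "o3": {"input": 10.00, "output": 40.00},
    "o4-mini": {"input": 1.10, "output": 4.40},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of one request."""
    rates = PRICES[model]
    total = input_tokens * rates["input"] + output_tokens * rates["output"]
    return total / 1_000_000


# Hypothetical workload: a long report in, a short summary out.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 50_000, 5_000):.4f}")
```

For this workload the estimate is about $0.70 on o3 versus about $0.077 on o4-mini. Reasoning models may also bill for internal reasoning tokens, which this sketch does not model, so real invoices can run higher.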

For many practical business applications, o4-mini will likely be the sweet spot. In my testing across several business scenarios – financial analysis, market research, and technical documentation – I found o4-mini's reasoning capabilities more than sufficient, with the cost savings and speed improvements making it the more practical choice for daily operations.

Most enterprises will likely adopt a tiered approach: o4-mini for routine analytical tasks and development work, reserving o3 only for the most complex reasoning challenges where the marginal performance difference justifies the cost.
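A tiered setup can be as simple as a routing rule. The sketch below is one way to express it, not a recommended production design. The 1-10 complexity score, the threshold of 8, and the names `Task` and `choose_model` are all hypothetical, and in practice the score might come from a human reviewer or a cheap classifier.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    complexity: int  # hypothetical 1-10 score assigned by the caller


# Hypothetical cutoff: tasks scoring at or above this go to o3.
O3_THRESHOLD = 8


def choose_model(task: Task) -> str:
    """Send only the hardest tasks to o3; everything else goes to o4-mini."""
    return "o3" if task.complexity >= O3_THRESHOLD else "o4-mini"


tasks = [
    Task("Summarize weekly sales report", 3),
    Task("Refactor a small utility module", 5),
    Task("Multi-constraint product roadmap analysis", 9),
]
for task in tasks:
    print(f"{choose_model(task):8s} <- {task.description}")
```

The useful part is having an explicit, adjustable threshold: you can start conservative, review the o4-mini outputs, and raise or lower the cutoff as you learn where the cheaper model falls short.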

The Real Innovation: Autonomous Problem-Solving

Looking beyond the specifications and benchmarks, what's truly groundbreaking about these models is how they approach problem-solving. Rather than simply providing answers, they actively work through problems – pausing to think, exploring different approaches, and integrating multiple forms of reasoning.

I tested using o3 to help analyze a complex product development challenge, and the difference from previous models is stark. When faced with a multi-faceted problem involving technical constraints, market considerations, and resource limitations, o3 doesn't just generate plausible-sounding responses – it methodically breaks down the problem, considers different angles, and builds toward a solution that integrates all relevant factors.

This "thinking in public" approach makes the models' reasoning transparent and trustworthy. You can follow their logical process, catch potential errors, and understand the basis for recommendations. This transparency is invaluable for business leaders who need to justify AI-informed decisions.

The Bigger Picture: What This Means for Enterprise AI

OpenAI's new releases reflect a maturing AI landscape where models are increasingly specialized for particular use cases. The o-series is clearly positioned as the reasoning and problem-solving counterpart to GPT's conversational strengths, with both likely to be unified in the forthcoming GPT-5.

For businesses, this specialization creates both opportunities and challenges. The increased capabilities enable new applications in research and development, complex decision support, and specialized knowledge work. However, the proliferation of models with different strengths also increases the complexity of building an effective AI strategy.

Organizations will need to develop clearer frameworks for matching AI capabilities to business problems, considering factors like:

The complexity and depth of reasoning required

Cost-performance tradeoffs for different use cases

Integration requirements with existing systems and workflows

The balance between autonomy and human oversight

A Balanced Perspective: The Pareto Frontier

While OpenAI's new models are undeniably impressive, maintaining perspective is essential. Despite leading many benchmarks, o3 does not currently sit on the cost-performance Pareto frontier because of its price (o4-mini appears to). Google's Gemini 2.5 Pro still maintains significant advantages in certain areas, most notably its massive 1-million-token context window (compared to o3's 200,000).

This expanded context allows Gemini to process approximately 1,500 pages of text or 30,000 lines of code in a single run – a substantial advantage for applications involving entire codebases, comprehensive documentation sets, or extensive research materials. Gemini 2.5 Pro also offers broader multimodal capabilities, including native support for audio and video inputs alongside text and images.

Cost considerations also favor Gemini 2.5 Pro for some use cases. At around $1.25 per million input tokens and $10 per million output tokens, it is significantly cheaper than o3, though o4-mini still undercuts it, most clearly on output tokens ($4.40 versus $10).
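The Pareto idea is easy to make concrete. The sketch below uses only the prices and context windows quoted in this article and deliberately leaves out benchmark quality, so it illustrates the dominance test itself rather than settling which model is best. The `Model` type and function names are my own.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    input_price: float   # USD per million input tokens
    output_price: float  # USD per million output tokens
    context: int         # context window in tokens


# Figures as quoted in this article.
MODELS = [
    Model("o3", 10.00, 40.00, 200_000),
    Model("o4-mini", 1.10, 4.40, 200_000),
    Model("Gemini 2.5 Pro", 1.25, 10.00, 1_000_000),
]


def dominates(a: Model, b: Model) -> bool:
    """True if a is no worse than b on every axis and strictly better on one."""
    no_worse = (a.input_price <= b.input_price
                and a.output_price <= b.output_price
                and a.context >= b.context)
    better = (a.input_price < b.input_price
              or a.output_price < b.output_price
              or a.context > b.context)
    return no_worse and better


def pareto_frontier(models: list[Model]) -> list[Model]:
    """Keep only the models that no other model dominates."""
    return [m for m in models if not any(dominates(o, m) for o in models)]


print([m.name for m in pareto_frontier(MODELS)])
```

On these two axes alone, o4-mini and Gemini 2.5 Pro are both on the frontier (one is cheapest, the other has the largest context), while o3 is dominated by o4-mini. Adding a quality axis where o3 leads would put it back on the frontier, which is exactly why the choice of axes matters.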

Looking Forward: Strategic Implications

As the AI landscape continues its rapid evolution, the real winners will be organizations that develop nuanced strategies for leveraging different models' strengths. Rather than viewing this as a horse race with a single winner, forward-thinking businesses are building flexible AI architectures that can adapt to emerging capabilities.

The o3 and o4-mini release represents another significant step toward AI systems that can reason, problem-solve, and integrate multiple modalities in ways that genuinely augment human capabilities. For business leaders, the implications are profound: these tools aren't just automating routine tasks but are increasingly capable of supporting complex cognitive work that was previously the exclusive domain of human experts.

The challenge now is technical implementation and strategic integration – understanding how these new capabilities can transform business processes, enhance decision-making, and create competitive advantages. Those who approach this opportunity thoughtfully will find themselves with powerful new tools for innovation and growth.

Steve has worked with hundreds of companies to understand and adopt AI in their organizations. Need a comprehensive AI workshop for your organization? Want highly customized, personal AI training? Grab a 15-minute slot on my calendar to discuss: https://calendar.app.google/3ctoTDUgtg71TQDG7