The Research Revolution: How AI is Rewriting the Rules of Knowledge Work

The Algorithm as Apprentice: When Your Research Assistant is an AI

Introduction: Beyond the Algorithm – The Rise of the AI Research Partner

We live in an age defined by information overload. The ability to sift through the digital deluge and synthesize disparate data points into coherent understanding has become a critical skill and a competitive advantage in nearly every field. We've relied on search engines for decades – powerful tools, certainly, but ultimately limited. They deliver potential answers; the real work of analysis, synthesis, and judgment remains firmly in human hands.

But that paradigm is shifting. A new generation of AI-powered research tools, emerging from the labs of Google and OpenAI, promises to alter the landscape of knowledge work fundamentally. These aren't mere incremental upgrades to existing search technology. They represent a qualitative leap towards AI that doesn't just find information but actively reasons with it. This article will explore these nascent "Deep Research" capabilities, dissect their differences, and examine their potential to reshape industries, redefine expertise, and even alter the very nature of discovery.

Part 1: Deep Research – From Search to Synthesis

The limitations of traditional search are well-known. You enter a query, and an algorithm returns a ranked list of links. The user is left to navigate a fragmented web of information, often struggling to separate signal from noise. Deep Research aims to transcend this model. It's about moving from finding information to understanding it.

Both Google's and OpenAI's offerings share a common ambition: to use large language models (LLMs) and other AI techniques to do the following:

Comprehend Complexity: These tools are designed to handle nuanced, multi-faceted questions that defy simple keyword searches. They can grapple with ambiguity and interpret intent.

Integrate Disparate Sources: They don't just retrieve individual web pages; they draw insights from a vast corpus of information, including articles, reports, datasets, and even (in OpenAI's case) uploaded files.

Synthesize and Analyze: This is the crucial difference. They don't simply present raw data; they identify patterns, reconcile conflicting information, draw inferences, and construct coherent narratives.

Generate Structured Outputs: The result isn't a list of links; it's a well-organized report, complete with citations, summaries, and often, data visualizations.

Exhibit Agentic Reasoning: These systems display a degree of autonomy, making decisions about search strategy, adapting to new information, and even acknowledging uncertainties.

Part 2: OpenAI's Autonomous Agent (Deep research) – A Digital Research Assistant

OpenAI's approach, currently available to ChatGPT Pro subscribers (at a premium price of $200/month), leans heavily into the concept of an "autonomous research agent." Powered by a specialized version of their forthcoming "o3" model, it's designed to operate independently, much like a highly skilled (and tireless) research assistant.

Key Differentiators:

True Agency: This is the defining feature. You provide a prompt – a research question, a problem to solve – and the system takes over, often working for extended periods (up to 30 minutes) to produce a comprehensive report.

Iterative Reasoning: It breaks down complex tasks into smaller, manageable steps, conducting multiple searches, analyzing results, and refining its approach as it goes.

Contextual Understanding: The ability to upload files – PDFs, spreadsheets, and even images – allows users to provide rich context, grounding the research in specific datasets or documents. This is a significant advantage.

Transparency and Auditability: The system provides detailed citations and, crucially, a summary of its reasoning process. This "chain of thought" transparency is essential for building trust and verifying the validity of the findings.
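To make the "plan, search, analyze, refine" loop described above more concrete, here is a minimal toy sketch in Python. It is not OpenAI's implementation, which has not been published in this form. Every name in it (`plan`, `search`, `refine`, `TOY_CORPUS`) is hypothetical, the "web" is a small in-memory dictionary with placeholder snippets, and the planning step is hard-coded where a real system would call a language model.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the web: query -> list of (source, snippet).
# All entries are placeholders, not real search results.
TOY_CORPUS = {
    "background": [("source-1", "placeholder snippet A")],
    "key players": [("source-2", "placeholder snippet B")],
}

@dataclass
class Report:
    question: str
    findings: list = field(default_factory=list)        # (sub-question, snippet, source)
    open_questions: list = field(default_factory=list)  # things the agent could not answer

def plan(question):
    """Stand-in for the model breaking a task into smaller steps (hard-coded)."""
    return ["background", "key players", "legal history"]

def search(query):
    """Stand-in for a web search; returns an empty list when nothing is found."""
    return TOY_CORPUS.get(query, [])

def refine(query):
    """Stand-in for reformulating a failed query; None means give up."""
    return {"legal history": "court records"}.get(query)

def research(question, max_steps=10):
    report = Report(question)
    queue = plan(question)
    steps = 0
    while queue and steps < max_steps:
        sub_question = queue.pop(0)
        steps += 1
        results = search(sub_question)
        if results:
            # Keep the source with every finding so the report can cite it.
            for source, snippet in results:
                report.findings.append((sub_question, snippet, source))
            continue
        retry = refine(sub_question)
        if retry is not None:
            queue.append(retry)  # adapt the approach and try again later
        else:
            report.open_questions.append(sub_question)  # acknowledge uncertainty
    return report

report = research("example research question")
for sub_question, snippet, source in report.findings:
    print(f"{sub_question}: {snippet} [{source}]")
print("Unresolved:", report.open_questions)
```

The design point is the shape of the loop rather than any single step: each finding keeps its citation, a failed search triggers a reformulated query, and anything still unanswered is reported as an open question instead of being dropped silently.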

Ideal Applications:

Competitive Intelligence: Deep dives into competitor strategies, product offerings, and financial performance, going far beyond surface-level analysis.

Scientific Literature Reviews: Rapidly synthesizing findings from a vast body of academic research, identifying key trends, and accelerating the pace of scientific inquiry.

Due Diligence and Financial Analysis: Supporting investment decisions with comprehensive reports, identifying risks and opportunities, and uncovering hidden patterns.

Legal Research: Navigating the complexities of case law, statutes, and regulations, providing a more nuanced understanding of legal precedents.

Bespoke Research Projects: Tailoring research to highly specific needs, leveraging the system's ability to adapt and learn.

Part 3: Google's Integrated Approach – The Power of the Ecosystem

Google's Deep Research, accessible through Gemini Advanced (at a more accessible $20/month), takes a subtly different tack. It's positioned as a "personal AI research assistant," deeply integrated within the familiar Google ecosystem.

Key Features:

Structured Research Plans: Before starting the research, Gemini outlines its proposed approach, allowing users to review and modify the plan. This provides a degree of control, albeit at the potential cost of some flexibility.

Google Ecosystem Synergy: The tight integration with Google Docs and other Workspace tools makes it a natural fit for users already invested in Google's productivity suite.

Real-Time Data Advantage: Leveraging the power of Google Search, it can access up-to-the-minute information, a potential edge over OpenAI's system, which can sometimes lag in rapidly evolving domains.

Speed and Efficiency: Generally faster than OpenAI's offering, it's designed for situations where rapid insights are paramount.

Broad Accessibility: The lower price point makes it accessible to a much wider range of users, from students to small business owners.

Best Use Cases:

Market Trend Analysis: Quickly identifying emerging trends, consumer sentiment shifts, and evolving market dynamics.

Content Strategy and Ideation: Supporting content creators with research-backed insights, identifying relevant topics, and informing editorial decisions.

Academic Research Support: Assisting students and educators with background research, literature searches, and data gathering.

Small Business Intelligence: Providing entrepreneurs with valuable data on competitors, market opportunities, and potential locations.

Rapid Summarization: Delivering concise overviews of complex topics, ideal for time-constrained professionals.

Part 4: A Comparative Analysis – Choosing the Right Tool for the Task

To compare the two, I gave both tools the same task from a project of my own: researching the history of Apple II software piracy in the early years (1977-1985). This was the exact query I used with both OpenAI's and Google's Deep Research:

I'm researching the history of software piracy in the Apple II era (1977-1985). Please find information about:

The major software cracking groups and their members, particularly in North America and Europe

Technical documentation about copy protection methods used by companies like Sierra Online, Broderbund, and Electronic Arts

Contemporary magazine articles and BBS posts discussing cracking techniques

Documentation of "crack screens" and the artistic/cultural elements of the cracking scene

Interviews or firsthand accounts from former crackers who are now willing to discuss that period

Court cases and legal actions against major software pirates during this period

The evolution of copy protection technology from early disk protection to physical dongles and manual-based schemes

The role of user groups and computer clubs in software distribution

Technical specifications of tools like Locksmith, Copy II Plus, and hardware copying devices

Primary sources from the period: newsletters, disk magazines, and BBS archives

Focus on verifiable sources like

Computer magazine archives (Softalk, Compute!, BYTE)

Legal records and court documents

Software preservation archives

Academic papers on early software piracy

Oral histories and documented interviews

Technical documentation from the period

User group newsletters and meeting minutes

Google's Deep Research created a summary document with 2,506 words and referenced 18 different sources.

OpenAI's Deep research created a summary document with 8,406 words and referenced 13 sources.
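One rough way to read those numbers is to look at how many words each report wrote per cited source. This is only back-of-the-envelope arithmetic on the figures above, not a metric either vendor reports, and the function name `words_per_source` is just an illustrative choice.

```python
# Word and source counts as reported above for the two summary documents.
reports = {
    "Google Deep Research": {"words": 2506, "sources": 18},
    "OpenAI Deep research": {"words": 8406, "sources": 13},
}

def words_per_source(words: int, sources: int) -> float:
    """Average number of words written per cited source."""
    return words / sources

for name, r in reports.items():
    ratio = words_per_source(r["words"], r["sources"])
    print(f"{name}: {ratio:.0f} words per cited source")

# How much longer the OpenAI report is than the Google report.
length_ratio = (
    reports["OpenAI Deep research"]["words"]
    / reports["Google Deep Research"]["words"]
)
print(f"OpenAI report is {length_ratio:.1f}x the length of Google's")
```

On these figures, Google's report works out to about 139 words per cited source and OpenAI's to about 647, with the OpenAI report roughly 3.4 times longer. The ratio says nothing about quality on its own. It simply shows that the OpenAI report spent far more text on each source, while the Google report spread a shorter summary across more of them.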

The results are too long to paste into the article here, so I've created a link where you can view the files here:

One thing I've noticed is that when you copy and paste the results out of ChatGPT, sometimes the formatting around source notes gets wonky. I did not clean it up - just letting you know in case you see a couple of odd artifacts.

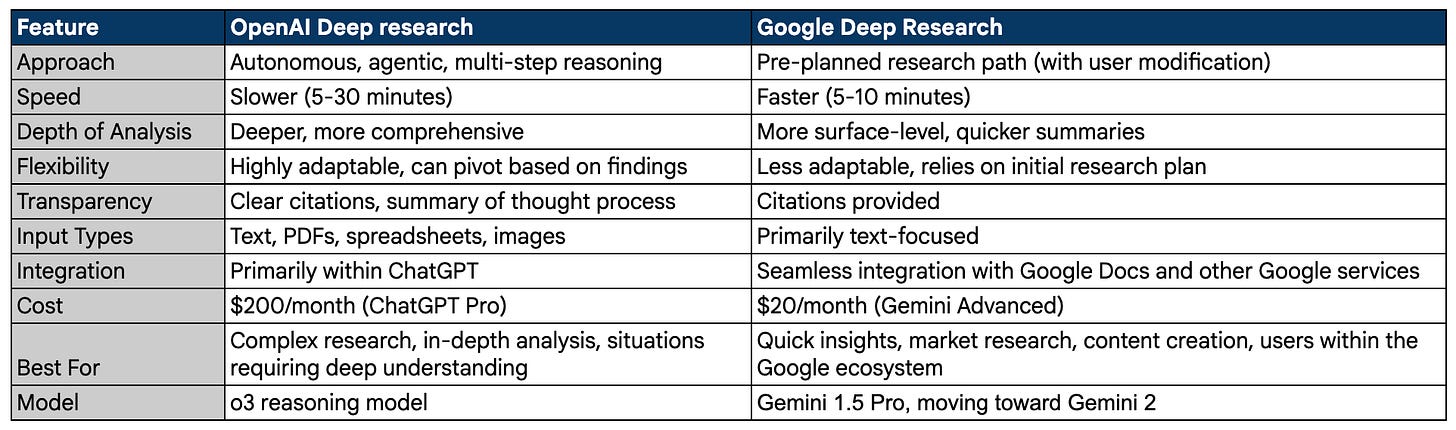

Here is a good summary comparing the two tools:

Part 5: The Broader Implications – A New Era of Knowledge Work

The advent of these Deep Research tools has implications that extend far beyond specific industries or use cases:

The Democratization of Expertise: By automating much of the drudgery of research, these tools make in-depth analysis accessible to a wider audience, potentially leveling the playing field between experts and non-experts.

The Reconfiguration of Labor: They will likely shift the focus of knowledge work, freeing human researchers to concentrate on higher-order tasks such as critical thinking, creative problem-solving, and strategic interpretation.

The Acceleration of Discovery: By automating the process of literature review and data analysis, they could significantly accelerate the pace of scientific and technological innovation.

The Evolution of Search Itself: These tools represent a potential shift from a paradigm of searching for information to one of receiving synthesized, actionable knowledge.

New Modes of Human-AI Partnership: Getting value from these tools requires people and AI systems to collaborate effectively, with each doing the part of the work it handles best.

Challenges and Ethical Considerations:

The transformative potential of these tools is undeniable, but it's also crucial to acknowledge the challenges:

The Problem of Bias and Accuracy: AI-generated research is only as good as the data the model was trained on and the sources it draws from. Biases in either can lead to skewed or misleading results. Rigorous validation and critical evaluation remain essential.

The Risk of Over-Reliance: There's a danger of becoming overly dependent on AI-generated insights, potentially eroding critical thinking skills and leading to a passive acceptance of machine-generated conclusions.

The Ethical Quandaries: Questions surrounding the ownership of AI-generated knowledge, the potential for misuse, and the impact on employment need careful consideration and proactive solutions.

Data Security: Any use of these tools must comply with an organization's data security, compliance, and privacy guidelines.

Conclusion: Navigating the Inflection Point

Google's and OpenAI's Deep Research capabilities are not just technological advancements; they represent a fundamental shift in how we interact with information. They offer a glimpse of a future where AI becomes a true partner in the pursuit of knowledge, augmenting human intelligence and accelerating the pace of discovery. As with any disruptive technology, navigating this inflection point will require careful attention to both the opportunities and the challenges. The journey from mere search to genuine, AI-powered research has begun, and its consequences will be far-reaching.

Here’s an update on Magnus (I know some of you only read this for updates on my St. Bernard adventures!). He’s 17 weeks old and 50 pounds now - so amazing! Here are two recent pictures:

As always, I’d love to hear from you. I’ve been conducting many AI workshops and training sessions nationwide to help companies understand the ‘art of the possible’ regarding how AI can help their business. If this is of interest, please reach out (steve@revopz.net).

Best,

Steve